What you need to know

- Google Images on the web has begun testing support for more “complex” search queries.

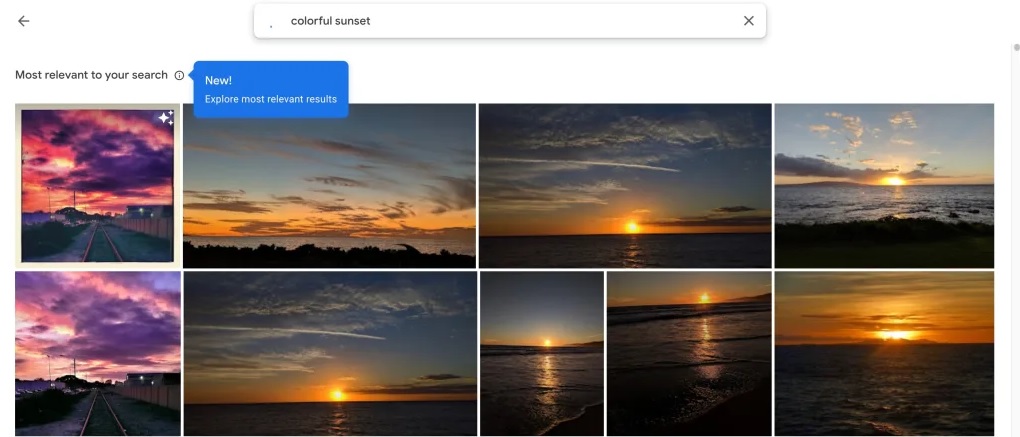

- Users can search for terms like “colorful sunset” and receive the most relevant results for that query.

- The test also lets users combine a tagged person with a location to find photos of that person at a specific place.

Google Images appears to be testing a revamped search function that better understands what users are looking for. According to 9to5Google, users have noticed over the past few days that Google Images on the web shows a prompt reading “Try a more powerful search,” followed by a “Learn more” option.

Google explains that users can try queries such as “colorful sunset” or “Cinderella,” among other terms. In 9to5Google’s testing, a search for “colorful sunsets” in their image library returned several images labeled “most relevant to your search.” The results were also ordered by relevance to the query rather than by the date each image was taken.

The prompt has not yet been spotted on Android devices, though that may change if the test goes well.

Users may also be able to run more specific searches to track down an elusive memory. Further tests explored searching by tagged faces (people) combined with a location, for example “Brianna in Grant Park.” Both kinds of query could prove useful: broad, descriptive searches surface any images that fit a description, while narrower ones help pinpoint a specific memory.