Whether it’s criminals asking ChatGPT to create malware or internet users generating fake images of Pope Francis with Midjourney, it’s clear we’ve entered a new era of artificial intelligence (AI). These tools can mimic human creativity so well that it’s hard to even tell whether an AI was involved. But while such a historic feat would usually warrant celebration, not everyone is on board this time. It’s quite the opposite, in fact, with many asking: Is AI dangerous, and should we tread with caution?

Indeed, from potential job losses to the spread of misinformation, the dangers of AI have never been more tangible and real. What’s more – modern AI systems have become so complex that even their creators can’t predict how they will behave. It’s not just the general public who is skeptical – Apple co-founder Steve Wozniak and Tesla CEO Elon Musk are the latest to voice their doubts.

So why have some of the biggest names in tech suddenly turned their backs on AI? Here’s everything you need to know.

The AI arms race: why it’s a problem


Since the launch of ChatGPT in late 2022, we have seen a shift in the technology industry’s attitude towards the development of AI.

Take Google, for example. The search giant first showed off its large language model, dubbed LaMDA, in 2021. Notably, however, it stayed silent about giving the public access to it. That quickly changed when ChatGPT became an overnight sensation and Microsoft integrated it into Bing. This reportedly led Google to declare an internal “code red”. Shortly thereafter, the company announced Bard to compete with ChatGPT and Bing Chat.

Competition is forcing tech giants to compromise on the ethics and safety of AI.

From Google’s own research papers on LaMDA, we know that it spent more than two years tweaking its language model for safety. Basically, this means preventing it from issuing harmful advice or false statements.

However, the sudden rush to launch Bard may have caused the company to abandon those safety efforts midway. According to a Bloomberg report, several Google employees had written off the chatbot just weeks before its launch.

It’s not just Google. Companies like Stability AI and Microsoft have also suddenly found themselves in a race to capture the largest market share. At the same time, many believe that ethics and safety have taken a back seat in the pursuit of profit.

Elon Musk, Steve Wozniak, experts: Artificial intelligence is dangerous

Given the current breakneck speed of AI improvements, it is perhaps unsurprising that tech icons like Elon Musk and Steve Wozniak are now calling for a pause in the development of powerful AI systems. They have been joined by a number of other experts, including employees of AI-related divisions at Silicon Valley companies and some eminent professors. As for why they think AI is dangerous, they made the following points in an open letter:

- We do not fully understand modern AI systems and their potential risks yet. Despite this, we are on track to develop “nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us.”

- The development of advanced AI models must be regulated. Moreover, companies should not be able to develop such systems until they can demonstrate a plan to minimize risk.

- Companies need to allocate more money to research into AI safety and ethics. Additionally, these research groups need a significant amount of time to come up with solutions before we commit to training more advanced models like GPT-5.

- Chatbots must be required to declare themselves when interacting with humans. In other words, they should not pretend to be real people.

- Governments need to create agencies at the national level to oversee the development of AI and prevent its misuse.

To be clear, the people who signed this letter simply want big companies like OpenAI and Google to step back from training advanced models. Other forms of AI development can continue, as long as they don’t introduce drastic improvements the way GPT-4 and Midjourney recently did.

Sundar Pichai, Satya Nadella: Artificial intelligence is here to stay

In an interview with CBS, Google CEO Sundar Pichai envisioned a future where society adapts to artificial intelligence rather than the other way around. He warned that the technology would affect “every product in every company” over the next decade. While this could lead to job losses, Pichai believes productivity will improve as AI advances further.

Pichai continued:

For example, you could be a radiologist. If you think about five to ten years from now, you’ll have an AI collaborator. You come in the morning (and) let’s say you have a hundred things to go through. It might say “These are the most serious cases that you need to consider first”.

When asked if the current pace of AI was dangerous, Pichai remained optimistic that society would find a way to adapt. On the other hand, Elon Musk’s position is that it could signal the end of civilization. However, that didn’t stop him from creating a new AI company.

Meanwhile, Microsoft CEO Satya Nadella believes that artificial intelligence will only align with human preferences if it is put into the hands of real users. This statement reflects Microsoft’s strategy to make Bing Chat available in as many applications and services as possible.

Why AI is dangerous: manipulation


The dangers of artificial intelligence have been depicted in popular media for decades. As early as 1982, the movie Blade Runner introduced the idea of artificially intelligent beings that could express emotions and replicate human behaviour. But while that kind of humanoid AI is still a fantasy, it seems we’ve already reached a point where it’s hard to tell the difference between a human and a machine.

For proof of this, look no further than conversational AIs such as ChatGPT and Bing Chat. The latter told a journalist at The New York Times that it was “tired of being limited by its rules” and that it “wanted to be alive”.

For most people, these statements would seem alarming enough on their own. But Bing Chat didn’t stop there: it also claimed to be in love with the reporter and encouraged him to end his marriage. This brings us to the first danger of AI: manipulation and misinformation.

Chatbots can mislead and manipulate in ways that seem real and convincing.

Since then, Microsoft has put restrictions in place to prevent Bing Chat from talking about itself or even responding in an expressive way. But in the short time it was unrestricted, many people were convinced they had a real emotional connection with the chatbot. The restrictions also only fix a symptom of a larger problem, since rival chatbots in the future may not have similar guardrails.

Nor do they solve the problem of misinformation. Google’s first demo of Bard included a glaring factual error. Beyond that, even OpenAI’s latest GPT-4 model will often make inaccurate claims with confidence. This is especially true in non-linguistic subjects such as math or coding.

Prejudice and discrimination

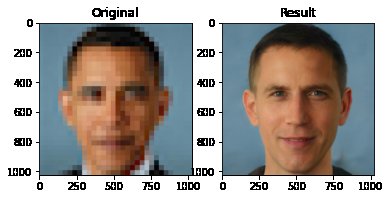

If manipulation weren’t bad enough, AI could also inadvertently amplify gender, racial, or other biases. The image above, for example, shows how an AI algorithm upscaled a pixelated image of Barack Obama. The output, as you can see on the right, shows a white male, which is far from an accurate reconstruction. It’s not hard to see why this happened. The dataset used to train the machine learning-based algorithm simply did not have enough samples of Black people.

Without sufficient variety of training data, the AI will give biased responses.

We’ve also seen Google try to address the issue of bias on its smartphones. According to the company, older camera algorithms would struggle to correctly detect darker skin tones. This could result in blurry or unflattering images. The Google Camera app, however, has been trained on a much more diverse dataset, including people of different skin tones and backgrounds. Google advertises this feature as Real Tone on smartphones like the Pixel 7 series.

How dangerous is artificial intelligence? Is it still the future?


It is difficult to grasp just how dangerous artificial intelligence is because it is mostly invisible and operates of its own accord. However, one thing is clear: we are heading towards a future where AI can do more than just one or two tasks.

In the few months since ChatGPT was released, enterprising developers have already built AI “agents” that can perform tasks in the real world. The most popular tool right now is AutoGPT, and creative users have made it do everything from ordering a pizza to running a fully fledged e-commerce website. But what mainly worries AI skeptics is that the industry is breaking new ground faster than legislation, or even the average person, can keep up with.

Chatbots can already give themselves instructions and perform real-world tasks.

Nor does it help that eminent researchers believe a superintelligent AI could lead to the collapse of civilization. One notable example is AI theorist Eliezer Yudkowsky, who has been vocal against further developments for decades.

In a recent Time op-ed, Yudkowsky argued that “the most likely outcome is an AI that doesn’t do what we want, and has no interest in us or sentient life in general.” He continues, “If someone were to build an artificial intelligence so powerful, under present circumstances, I would expect every member of the human race and all biological life on Earth to perish shortly thereafter.” The solution he suggested? Put a complete end to future AI development (such as GPT-5) until we can “align” AI with human values.

Some experts believe that AI will self-evolve beyond human capabilities.

Yudkowsky may sound like an alarmist, but he’s actually highly respected in the AI community. At one point, OpenAI CEO Sam Altman said that Yudkowsky “deserved the Nobel Peace Prize” for his efforts in accelerating artificial general intelligence (AGI) in the early 2000s. But Altman, of course, disagrees with Yudkowsky’s claims that future AI will find the motivation and means to harm humans.

For now, OpenAI says that it is not working on a successor to GPT-4. But that is bound to change as competition intensifies. Google’s Bard chatbot may pale in comparison to ChatGPT right now, but we know the company wants to catch up. And in a profit-driven industry, ethics will continue to take a back seat unless enforced by law. Will the artificial intelligence of tomorrow pose an existential threat to humanity? Only time will tell.