Using human and animal motions to teach robots to dribble a ball, and simulated humanoid characters to carry boxes and play football

Five years ago, we took on the challenge of teaching a fully articulated humanoid character to traverse obstacle courses. This demonstrated what reinforcement learning (RL) can achieve through trial and error, but it also highlighted two challenges in solving embodied intelligence:

- Reusing previously learned behaviors: A significant amount of data was needed for the agent to "get off the ground". Without any initial knowledge of what force to apply to each of its joints, the agent started with random body twitching and quickly fell to the ground. This problem could be alleviated by reusing previously learned behaviors.

- Idiosyncratic behaviors: When the agent finally learned to navigate obstacle courses, it did so with unnatural (albeit amusing) movement patterns that would be impractical for applications such as robotics.

Here, we describe a solution to both challenges called neural probabilistic motor primitives (NPMP), which involves guided learning with movement patterns derived from humans and animals, and we discuss how this approach is used in our Humanoid Football paper, published today in Science Robotics.

We also discuss how this same approach enables vision-guided whole-body manipulation by a simulated humanoid, such as carrying an object, and real-world robotic control, such as a robot dribbling a ball.

Distilling data into controllable motor primitives using NPMP

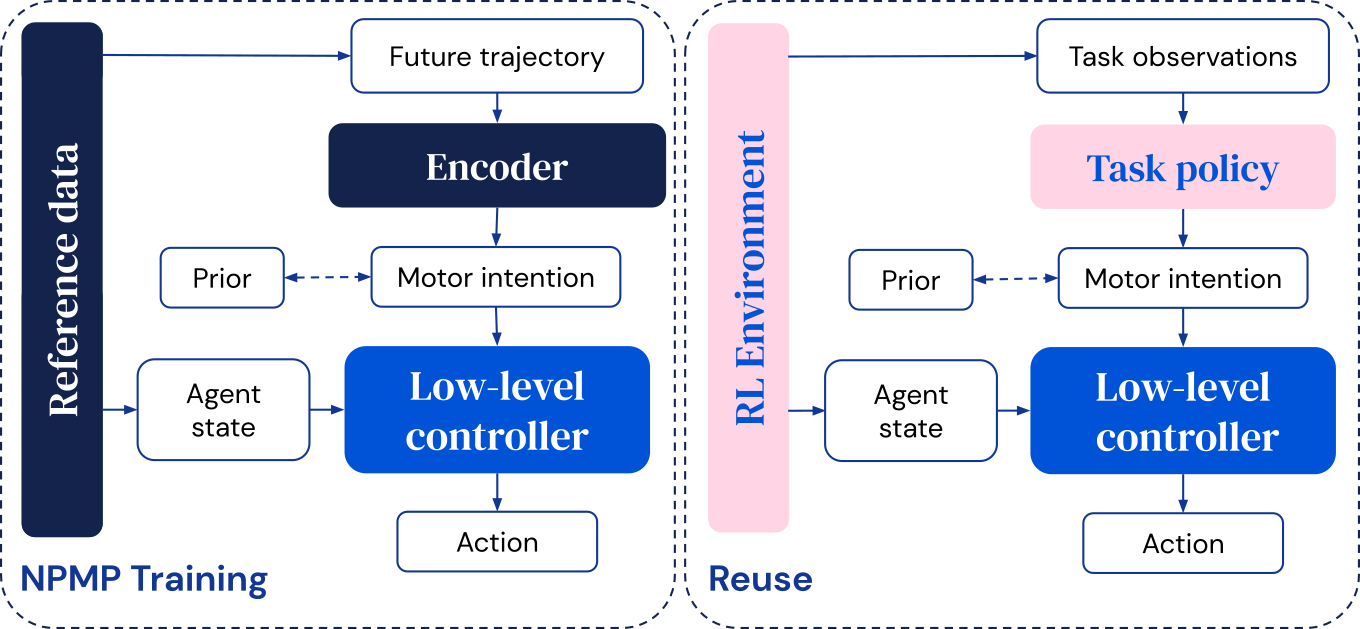

The NPMP is a general-purpose motor control module that translates short-horizon motor intentions into low-level control signals. It is trained offline or via RL by imitating motion capture (MoCap) data, recorded with trackers on humans or animals performing motions of interest.
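The text does not say how imitation is scored when training via RL. A common choice in MoCap tracking work, assumed here purely for illustration, is a per-step reward that decays exponentially with the squared error between the simulated character's joint angles and the reference pose from the clip. The function names and the `scale` parameter below are hypothetical, not taken from the NPMP work.

```python
import math


def tracking_reward(joint_angles, reference_angles, scale=2.0):
    """Hypothetical per-step imitation reward: 1.0 for a perfect match, decaying toward 0."""
    sq_error = sum((q - q_ref) ** 2 for q, q_ref in zip(joint_angles, reference_angles))
    return math.exp(-scale * sq_error)


def episode_return(trajectory, mocap_clip, scale=2.0):
    """Sum tracking rewards over a rollout aligned frame by frame with a MoCap clip."""
    return sum(tracking_reward(q, q_ref, scale) for q, q_ref in zip(trajectory, mocap_clip))


# A rollout that reproduces a 3-frame clip exactly earns the maximum return of 3.0.
clip = [[0.0, 0.5], [0.1, 0.4], [0.2, 0.3]]
print(episode_return(clip, clip))
```

The exponential keeps each step's reward in (0, 1], so a single badly tracked frame cannot dominate the return.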

The model consists of two parts:

- An encoder that takes a future trajectory and compresses it into a motor intention.

- A low-level controller that produces the next action given the agent's current state and this motor intention.
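The two-part structure can be sketched as follows. This is a toy illustration, not the published architecture: the tiny linear maps, the layer sizes, the standard-normal prior on the intent, and the names `TinyNPMP` and `distillation_loss` are all assumptions. The "probabilistic" aspect is shown by sampling the intent from a Gaussian with the reparameterization trick and penalizing its KL divergence from the prior. The loss assumes offline training against actions supplied by some existing expert controller.

```python
import math
import random

# Hypothetical sizes, for illustration only.
STATE_DIM, TRAJ_DIM, INTENT_DIM, ACTION_DIM = 4, 6, 2, 3


def linear(weights, x):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class TinyNPMP:
    """Toy encoder plus low-level controller; real NPMPs use deep networks."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Encoder: future reference trajectory -> mean and log-std of the motor intent.
        self.enc_mean = random_matrix(INTENT_DIM, TRAJ_DIM, rng)
        self.enc_logstd = random_matrix(INTENT_DIM, TRAJ_DIM, rng)
        # Low-level controller: [current state, motor intent] -> action.
        self.dec = random_matrix(ACTION_DIM, STATE_DIM + INTENT_DIM, rng)
        self.rng = rng

    def encode(self, future_traj):
        """Sample z ~ N(mu, sigma^2) via reparameterization; also return KL to N(0, 1)."""
        mu = linear(self.enc_mean, future_traj)
        log_std = linear(self.enc_logstd, future_traj)
        z = [m + math.exp(s) * self.rng.gauss(0.0, 1.0) for m, s in zip(mu, log_std)]
        kl = sum(0.5 * (m * m + math.exp(2 * s) - 1.0) - s for m, s in zip(mu, log_std))
        return z, kl

    def act(self, state, intent):
        """Next action from the current state and the motor intent, squashed to [-1, 1]."""
        return [math.tanh(a) for a in linear(self.dec, state + intent)]


def distillation_loss(model, state, future_traj, expert_action, beta=0.01):
    """Hypothetical offline objective: match an expert's action, plus a KL penalty."""
    intent, kl = model.encode(future_traj)
    action = model.act(state, intent)
    mse = sum((a - e) ** 2 for a, e in zip(action, expert_action)) / len(action)
    return mse + beta * kl


model = TinyNPMP(seed=0)
z, kl = model.encode([0.1] * TRAJ_DIM)
action = model.act([0.0] * STATE_DIM, z)
```

The KL term keeps the intent space close to a simple prior, which is what later makes it sensible to feed the controller randomly sampled intents.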

After training, the low-level controller can be reused to learn new tasks, with a high-level controller optimized to output motor intentions directly. This enables efficient exploration, since coherent behaviors are produced even with randomly sampled motor intentions, and it constrains the final solution.
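The reuse step can be sketched as a control loop in which only the high-level policy is specific to the new task. Everything here is a hypothetical stand-in: `frozen_low_level` plays the role of a pretrained low-level controller with fixed weights, `toy_step` is a toy integrator rather than a physics simulator, and `random_high_level` samples intents from a standard-normal prior to mimic early exploration. A real high-level controller would be a neural network trained with RL on the task reward.

```python
import math
import random

INTENT_DIM, STATE_DIM = 2, 4


def frozen_low_level(state, intent):
    """Stand-in for a pretrained, frozen low-level controller (hypothetical fixed mapping)."""
    return [math.tanh(0.5 * state[i] + intent[i % INTENT_DIM]) for i in range(STATE_DIM)]


def random_high_level(obs, rng):
    """Exploration: sample a random motor intent from the N(0, 1) prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(INTENT_DIM)]


def toy_step(state, action, dt=0.1):
    """Hypothetical toy dynamics: the state moves in the direction of the action."""
    return [s + dt * a for s, a in zip(state, action)]


def rollout(high_level, steps=50, seed=0):
    rng = random.Random(seed)
    state = [0.0] * STATE_DIM
    for _ in range(steps):
        intent = high_level(state, rng)           # only this part is learned for the new task
        action = frozen_low_level(state, intent)  # reused as-is, never updated
        state = toy_step(state, action)
    return state


final_state = rollout(random_high_level)
```

The design choice is that the task-specific policy never emits actuator commands directly, so even its random outputs pass through a controller that was trained on MoCap-derived behavior.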

Emergent team coordination in humanoid football

Football has been a long-standing challenge for embodied intelligence research, requiring both individual skills and coordinated team play. In our latest work, we used an NPMP as a prior to guide the learning of movement skills.

The result was a team of players that progressed from learning ball-chasing skills to eventually learning to coordinate. Previously, in a study with simple embodiments, we had shown that coordinated behavior can emerge in teams competing with each other. The NPMP allowed us to observe a similar effect, but in a scenario that required significantly more advanced motor control.

Our agents acquired skills including agile locomotion, passing, and division of labor, as demonstrated by a range of statistics, including metrics used in real-world sports analytics. The players exhibit both high-frequency motor control and long-term decision-making that involves anticipating their teammates' behaviors, leading to coordinated team play.

Whole-body manipulation and cognitive tasks using vision

Learning to interact with objects using the arms is another difficult control challenge. The NPMP can also enable this type of whole-body manipulation. With a small amount of MoCap data of people interacting with boxes, we can train an agent to carry a box from one location to another, using egocentric vision and only a sparse reward signal.

Similarly, we can teach the agent to catch and throw balls.

With the NPMP, we can also tackle maze tasks involving locomotion, perception, and memory.

Safe and efficient control of real-world robots

The NPMP can also help control real robots. Well-regularized behavior is critical for activities such as walking over rough terrain or handling fragile objects. Jittery motions can damage the robot itself or its surroundings, or at least drain its battery. Therefore, significant effort is often invested in designing learning objectives that make a robot do what we want while behaving in a safe and efficient manner.

As an alternative, we investigated whether priors derived from biological motion could give us well-regularized, natural-looking, and reusable movement skills for legged robots, such as walking, running, and turning, that are suitable for deployment on real-world robots.

Starting with MoCap data from humans and dogs, we adapted the NPMP approach to train skills and controllers in simulation that can then be deployed on real humanoid (OP3) and quadruped (ANYmal B) robots, respectively. This allowed a user to steer the robots with a joystick, or the robots to dribble a ball to a target location, in a natural-looking and robust way.

Benefits of using neural probabilistic motor primitives

In summary, we used the NPMP skill model to learn complex tasks with humanoid characters in simulation and with real-world robots. The NPMP packages low-level movement skills in a reusable fashion, making it easier to learn useful behaviors that would be difficult to discover through unstructured trial and error. Using motion capture as a source of prior information, it biases motor control learning toward natural movements.

The NPMP enables embodied agents to learn more quickly using RL; to learn more natural behaviors; to learn safer, more efficient, and more stable behaviors suitable for real-world robotics; and to combine whole-body motor control with longer-horizon cognitive skills, such as teamwork and coordination.

Learn more about our work: